Key research themes

1. How can transaction-level modeling (TLM) enhance the speed and accuracy trade-off in DRAM simulation frameworks?

This research area focuses on DRAM simulators that use transaction-level modeling to accelerate simulation while maintaining cycle accuracy. As detailed DRAM timing and power behavior become critical for system-level design and exploration, simulators must overcome the bottleneck of evaluating a cycle-accurate model on every clock cycle. TLM approaches update model state only when a memory transaction occurs rather than on every clock cycle. This substantially increases simulation throughput without losing essential timing accuracy. The balance is vital for designing modern DRAM controllers and for keeping pace with evolving JEDEC standards such as DDR5 and LPDDR5.
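To make the "evaluate per transaction, not per cycle" idea concrete, here is a minimal sketch of a transaction-level DRAM bank model. It is not taken from any of the surveyed simulators. The `Timing` values are hypothetical DDR-style parameters (`tRCD`, `tCL`, `tRP`, `tBURST` in memory-clock cycles), the model assumes an open-page policy, and for simplicity a bank is treated as busy until its previous transaction completes (no command pipelining). Each call to `access` computes the completion time in one step from the row-buffer state. The clock is never ticked.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass(frozen=True)
class Timing:
    """Hypothetical DDR-style timing parameters, in memory-clock cycles."""
    tRCD: int = 14   # ACTIVATE -> column command
    tCL: int = 14    # column READ -> first data
    tRP: int = 14    # PRECHARGE -> next ACTIVATE
    tBURST: int = 4  # data transfer time on the bus

class TlmDram:
    """Transaction-level DRAM sketch: state is updated once per transaction,
    never once per clock cycle."""

    def __init__(self, num_banks: int = 8, timing: Timing = Timing()):
        self.timing = timing
        self.open_row: List[Optional[int]] = [None] * num_banks
        self.ready_at: List[int] = [0] * num_banks
        self.evaluations = 0  # how many times the model was evaluated

    def access(self, arrival: int, bank: int, row: int) -> Tuple[int, str]:
        """Return (completion cycle, row-buffer outcome) for one transaction."""
        self.evaluations += 1
        t = self.timing
        start = max(arrival, self.ready_at[bank])  # wait if the bank is still busy
        if self.open_row[bank] == row:
            kind, latency = "hit", t.tCL                       # row already open
        elif self.open_row[bank] is None:
            kind, latency = "empty", t.tRCD + t.tCL            # activate, then read
        else:
            kind, latency = "conflict", t.tRP + t.tRCD + t.tCL  # precharge first
        done = start + latency + t.tBURST
        self.open_row[bank] = row   # open-page policy: leave the row open
        self.ready_at[bank] = done  # simplification: no command pipelining
        return done, kind

dram = TlmDram()
trace = [(0, 0, 5), (10, 0, 5), (20, 0, 9), (20, 1, 3)]  # (arrival, bank, row)
results = [dram.access(*req) for req in trace]
print(results)           # [(32, 'empty'), (50, 'hit'), (96, 'conflict'), (52, 'empty')]
print(dram.evaluations)  # 4
```

Four model evaluations cover 96 simulated cycles here. A per-cycle model would evaluate its state on each of those cycles. The price is that anything between transactions (for example refresh or power-state transitions) must be modeled explicitly as its own event.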

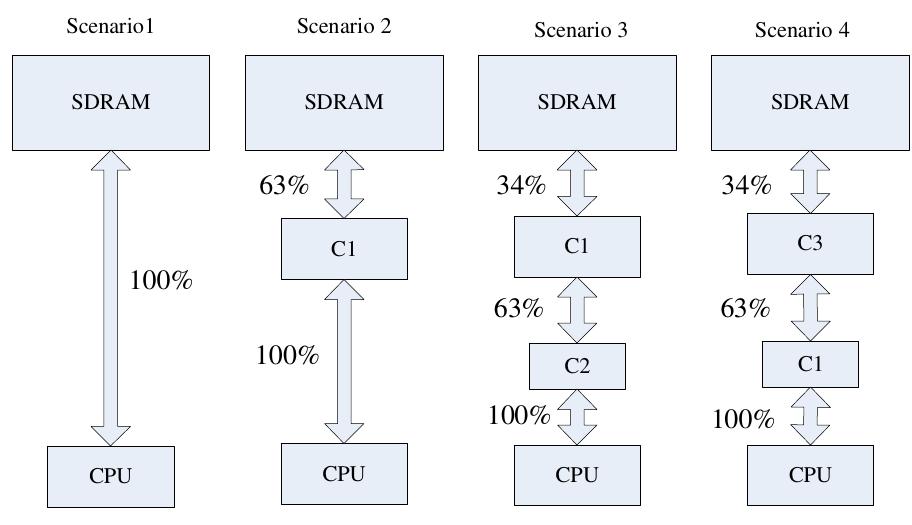

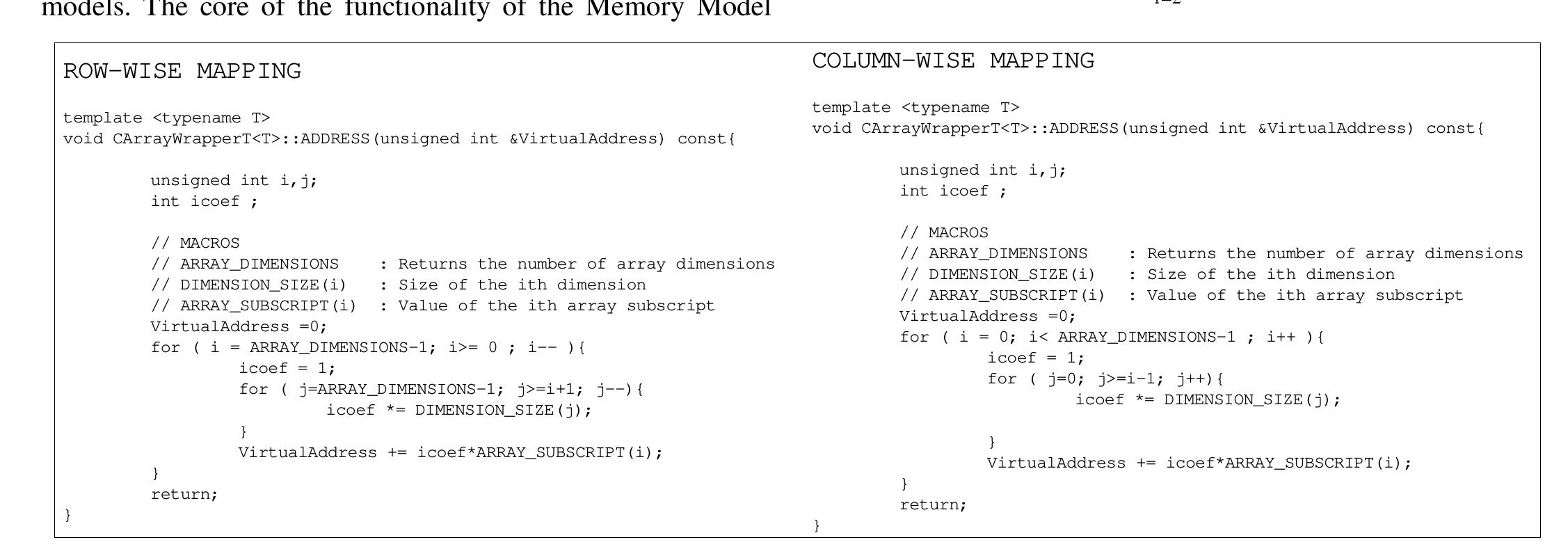

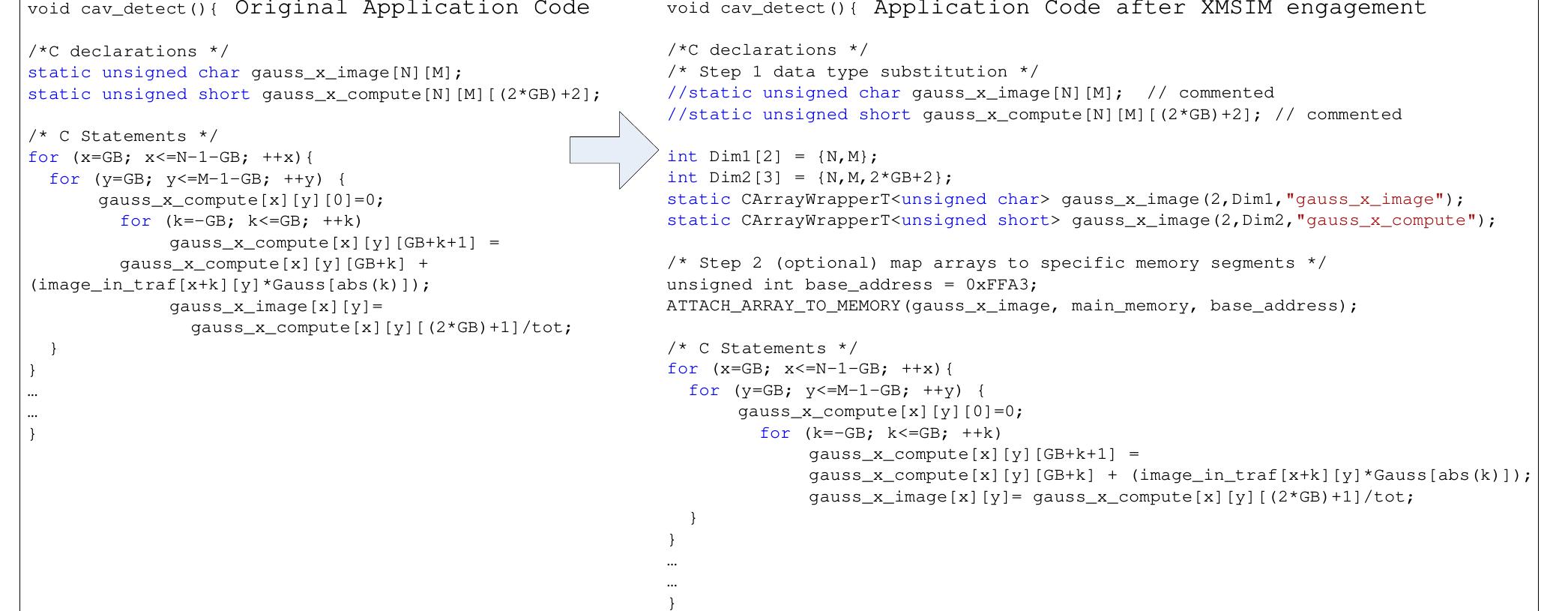

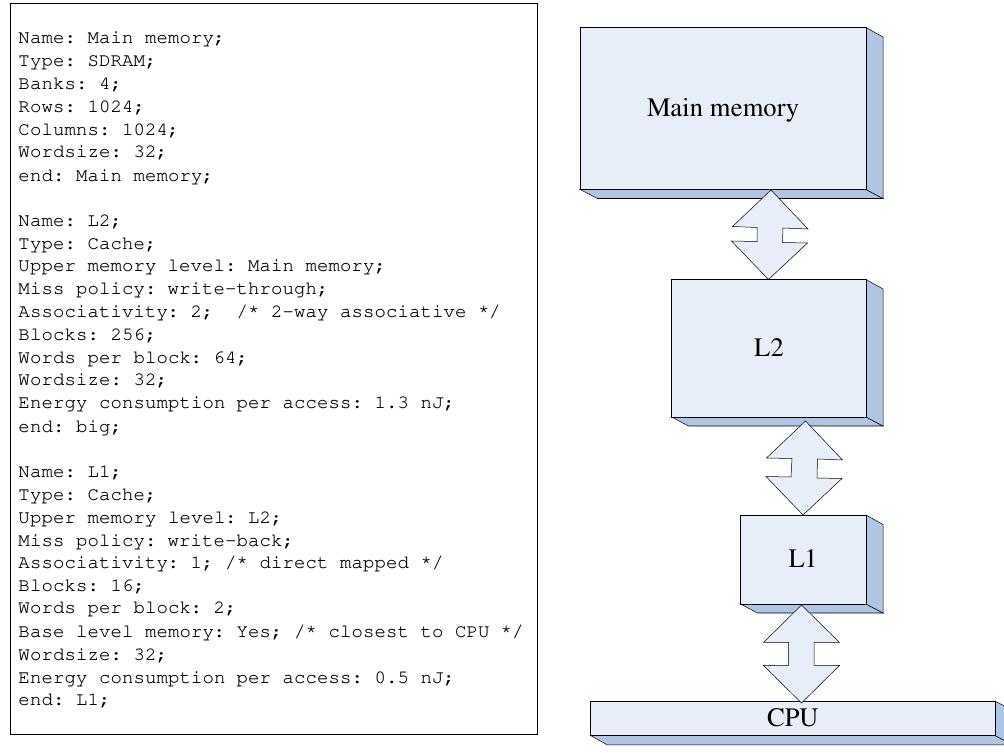

2. What methodologies enable early evaluation and optimization of memory hierarchies for multimedia and embedded applications?

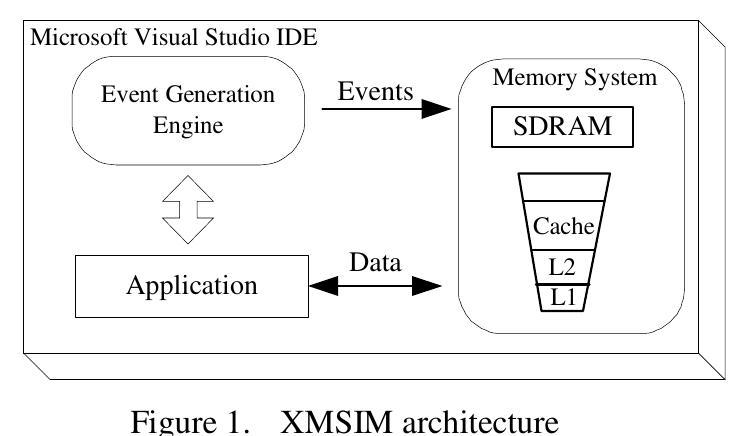

This theme explores simulators and frameworks that provide early-stage profiling and evaluation of memory hierarchy designs, with a focus on embedded and multimedia applications. These tools combine software code analysis with flexible memory mapping and parameterization, giving rapid feedback on how software transformations affect memory-system behavior. Extensibility and user control over simulation parameters let designers explore a range of memory hierarchy architectures and optimizations, improving design productivity and system performance, particularly in resource-constrained environments.
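As an illustration of "rapid feedback on how software transformations affect memory behavior", the sketch below pairs a pluggable memory-mapping function with a loop transformation and reports a crude locality metric. Everything here is hypothetical and chosen for illustration. `row_major` is one possible layout function for an `n x n` array of one-word elements, `traverse` yields the loop order with or without loop interchange, and `block_switches` counts how often consecutive accesses land in a different memory block. This is a rough proxy, not a cache model.

```python
from typing import Callable, Iterable, Tuple

def row_major(base: int, n: int) -> Callable[[int, int], int]:
    """Hypothetical memory map: element (i, j) of an n x n array of
    one-word elements, stored row-major starting at word address `base`."""
    return lambda i, j: base + i * n + j

def traverse(n: int, interchange: bool) -> Iterable[Tuple[int, int]]:
    """Yield (i, j) for a two-level loop nest; interchange=True swaps the
    loops, so the inner loop walks down a column instead of along a row."""
    for a in range(n):
        for b in range(n):
            yield (b, a) if interchange else (a, b)

def block_switches(addresses: Iterable[int], words_per_block: int) -> int:
    """Crude locality metric: how often consecutive accesses fall in a
    different block than the previous access."""
    switches, last = 0, None
    for addr in addresses:
        blk = addr // words_per_block
        if blk != last:
            switches += 1
            last = blk
    return switches

def profile(n: int, words_per_block: int, interchange: bool) -> int:
    addr_of = row_major(base=0, n=n)  # swap in another layout to compare mappings
    return block_switches((addr_of(i, j) for i, j in traverse(n, interchange)),
                          words_per_block)

print(profile(8, 4, interchange=False))  # 16: row order reuses each 4-word block
print(profile(8, 4, interchange=True))   # 64: column order changes block every access
```

Both the layout function and the loop order are parameters, so either the data mapping or the code transformation can be varied independently and compared on the same metric.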

3. How can integrated simulation infrastructures capture the interactions across the entire memory hierarchy including cache, DRAM, and emerging nonvolatile memories?

This research direction investigates comprehensive simulation platforms that co-simulate multiple levels of the memory hierarchy—from processor caches to DRAM and nonvolatile memory (NVM)—to analyze detailed interactions affecting modern multicore systems and exascale computing architectures. These integrated simulators enable realistic modeling of system software, operating systems, and applications interacting with heterogeneous hardware components, thus facilitating the study of performance, energy, resilience, and complex memory management policies in next-generation memory systems.
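A minimal sketch of how an integrated simulator can chain cache, DRAM and NVM so that their interactions show up in one latency figure is shown below. It is an assumption-laden toy, not the structure of any particular framework. Each `Level` is fully associative with LRU replacement and a fixed per-access latency. The capacities and latencies are hypothetical. The last level is a backing store that always hits. On a hit, the block is installed in every level above (an inclusive fill policy, also an assumption).

```python
from collections import OrderedDict
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Level:
    """One level of a hypothetical hierarchy: fully associative, LRU, fixed latency."""
    name: str
    capacity_blocks: Optional[int]  # None marks a backing store that always hits
    latency: int                    # cycles charged for consulting this level
    blocks: OrderedDict = field(default_factory=OrderedDict)  # LRU order, oldest first
    hits: int = 0
    misses: int = 0

    def lookup(self, block: int) -> bool:
        if self.capacity_blocks is None:
            self.hits += 1
            return True
        if block in self.blocks:
            self.blocks.move_to_end(block)  # refresh LRU position
            self.hits += 1
            return True
        self.misses += 1
        return False

    def fill(self, block: int) -> None:
        if self.capacity_blocks is None:
            return
        self.blocks[block] = None
        self.blocks.move_to_end(block)
        if len(self.blocks) > self.capacity_blocks:
            self.blocks.popitem(last=False)  # evict the least recently used block

def access(levels: List[Level], block: int) -> int:
    """Walk the hierarchy top-down, accumulating latency until a level hits,
    then install the block in every level above it (inclusive fill)."""
    total = 0
    for depth, level in enumerate(levels):
        total += level.latency
        if level.lookup(block):
            for upper in levels[:depth]:
                upper.fill(block)
            return total
    raise RuntimeError("the last level must be a backing store")

# Hypothetical sizes and latencies, chosen only to make the walk visible.
levels = [Level("cache", 2, 1), Level("DRAM", 4, 50), Level("NVM", None, 300)]
totals = [access(levels, b) for b in [0, 1, 0, 2, 3, 0]]
print(totals)  # [351, 351, 1, 351, 351, 51]
print([(lv.name, lv.hits, lv.misses) for lv in levels])
# [('cache', 1, 5), ('DRAM', 1, 4), ('NVM', 4, 0)]
```

The last access to block 0 misses in the cache but hits in DRAM (51 cycles rather than 351). That outcome depends on the capacities of both levels at once, and a single-level model cannot capture it.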

![The overall tool architecture is designed to be extended. Every class in both the Event Generation Engine and the Memory Model can be inherited by a user-defined class to widen the tool's functionality and simulation utilities. Inheritance [11] thus provides the event-handling support needed to trace data-type access events and lets users extend memory-access analysis and behavior. Fig. 3 summarizes the extensibility options of XMSIM. The designer may combine one of the Event Generation Engine components (base or extended) with one of the Memory Model components (base or extended), giving four combinations in total. Extending the Event Generation Engine opens new analysis opportunities based on the application's data types, while extending the Memory Model classes introduces memories with different characteristics and functions. Moreover, new analysis scenarios can be built on XMSIM's event-driven concept.](https://figures.academia-assets.com/88567347/figure_003.jpg)
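The inheritance-based extensibility described in the figure caption can be sketched as follows. The class and method names (`EventEngine`, `TypeProfilingEngine`, `MemoryModel`, `BankedMemoryModel`, `access`, `on_access`) are hypothetical and are not XMSIM's actual API. Bank selection by `addr % num_banks` is likewise an illustrative assumption. The point is the structure. A base or extended event generator can drive a base or extended memory model, which yields the 2 x 2 = 4 combinations mentioned in the caption.

```python
from itertools import product
from typing import Dict, List

class MemoryModel:
    """Base memory model: counts reads and writes (hypothetical API)."""
    def __init__(self) -> None:
        self.reads = 0
        self.writes = 0

    def on_access(self, name: str, addr: int, is_write: bool) -> None:
        if is_write:
            self.writes += 1
        else:
            self.reads += 1

class BankedMemoryModel(MemoryModel):
    """User-defined extension: also counts accesses per bank."""
    def __init__(self, num_banks: int = 4) -> None:
        super().__init__()
        self.num_banks = num_banks
        self.per_bank: List[int] = [0] * num_banks

    def on_access(self, name: str, addr: int, is_write: bool) -> None:
        super().on_access(name, addr, is_write)         # keep base behavior
        self.per_bank[addr % self.num_banks] += 1       # assumed bank interleaving

class EventEngine:
    """Base event generator: turns data-type accesses into memory events."""
    def __init__(self, memory: MemoryModel) -> None:
        self.memory = memory

    def access(self, name: str, addr: int, is_write: bool = False) -> None:
        self.memory.on_access(name, addr, is_write)

class TypeProfilingEngine(EventEngine):
    """User-defined extension: also tracks accesses per data-type/variable name."""
    def __init__(self, memory: MemoryModel) -> None:
        super().__init__(memory)
        self.by_name: Dict[str, int] = {}

    def access(self, name: str, addr: int, is_write: bool = False) -> None:
        self.by_name[name] = self.by_name.get(name, 0) + 1
        super().access(name, addr, is_write)

# Base/extended engine x base/extended memory model.
combos = list(product([EventEngine, TypeProfilingEngine],
                      [MemoryModel, BankedMemoryModel]))
print(len(combos))  # 4

engine = TypeProfilingEngine(BankedMemoryModel(num_banks=4))
for i in range(6):
    engine.access("image", addr=i)
engine.access("coeff", addr=100, is_write=True)
print(engine.by_name)          # {'image': 6, 'coeff': 1}
print(engine.memory.per_bank)  # [3, 2, 1, 1]
```

Because each subclass calls `super()`, the base bookkeeping (read/write counts) is preserved when new analysis is layered on top. Any engine can drive any memory model without either side knowing which variant the other is.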

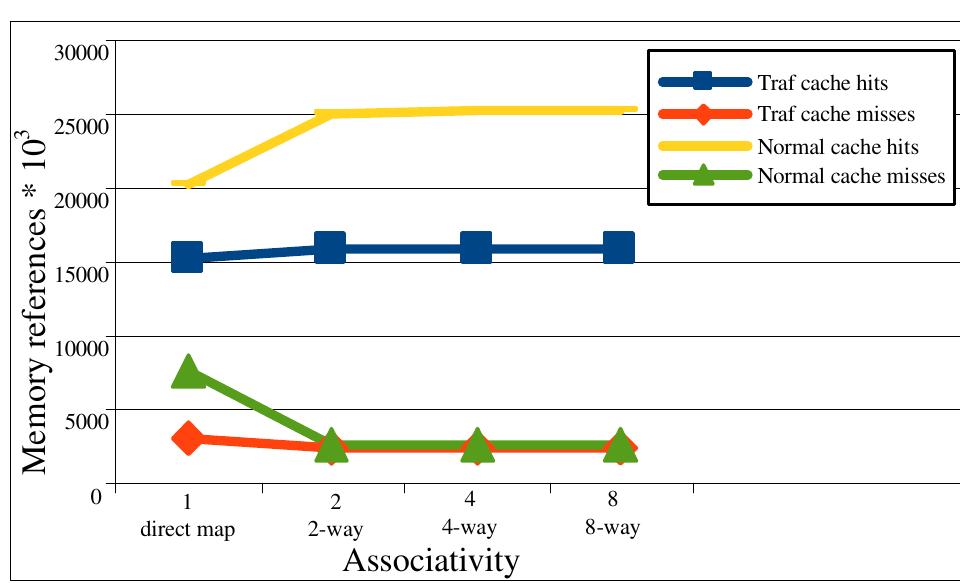

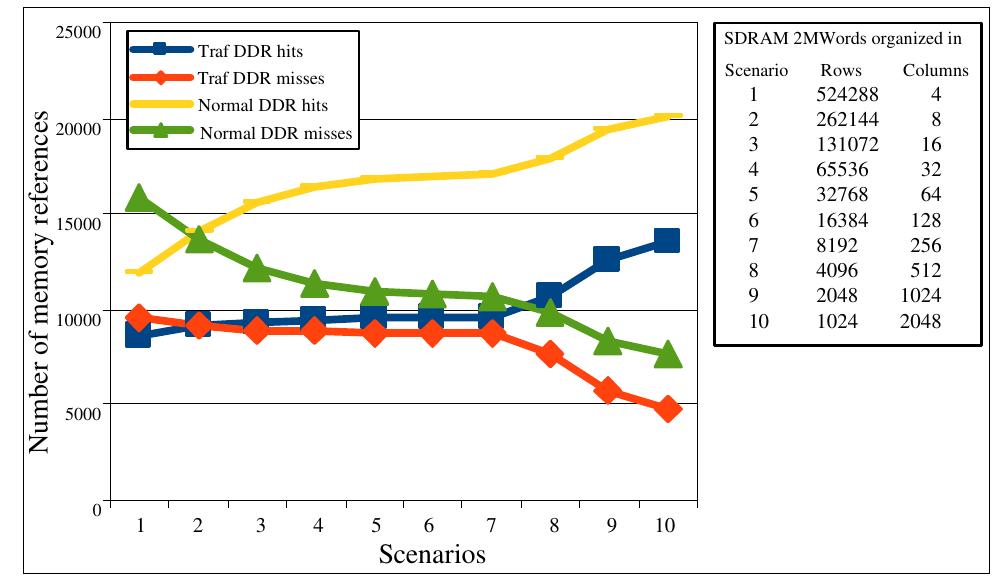

![while the optimized version of cavity has lower cache activity, because the sum of hits and misses is reduced in the optimized version. Fig. 10 illustrates cache performance for the initial and optimized (traf) cavity algorithm for one write-through cache consisting of 256 blocks of 4 words, when the set size is 1 (direct-mapped), 2 (2-way set-associative), 4 and 8. Numbers are in thousands. Performance improves slightly as the set size increases. Fig. 11 depicts SDRAM row hits and misses [14] for various architectures, for the original and optimized cavity algorithm. Across geometries ranging from 262144 rows by 8 columns up to 2048 rows by 1024 columns, hits rise steadily as the number of columns increases. Figure 9. Cache performance figures for various cache block configurations](https://figures.academia-assets.com/88567347/figure_005.jpg)
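The two measurements described in the caption (cache hits and misses as associativity varies, and SDRAM row hits as row width varies) can be approximated with the sketch below. It is not the tool used for the reported figures. LRU replacement, no-write-allocate on write misses, and a single-bank SDRAM with an open-row policy are all assumptions. The address traces are synthetic and do not come from the cavity algorithm, so the numbers show only the mechanism, not the paper's results.

```python
from typing import Dict, Iterable, List, Tuple

class WriteThroughCache:
    """Set-associative, write-through cache. LRU replacement and
    no-write-allocate are assumed policies."""
    def __init__(self, num_blocks: int = 256, words_per_block: int = 4, ways: int = 1):
        assert num_blocks % ways == 0
        self.words_per_block = words_per_block
        self.ways = ways
        self.num_sets = num_blocks // ways
        self.sets: List[List[int]] = [[] for _ in range(self.num_sets)]  # tags, LRU first
        self.hits = 0
        self.misses = 0

    def access(self, word_addr: int, is_write: bool = False) -> bool:
        block = word_addr // self.words_per_block
        index, tag = block % self.num_sets, block // self.num_sets
        lines = self.sets[index]
        if tag in lines:
            lines.remove(tag)
            lines.append(tag)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if not is_write:  # writes go straight to memory; only read misses allocate
            if len(lines) == self.ways:
                lines.pop(0)  # evict the least recently used tag
            lines.append(tag)
        return False

def sdram_row_hits(word_addrs: Iterable[int], columns: int) -> Tuple[int, int]:
    """(hits, misses) for a single-bank SDRAM whose rows hold `columns` words,
    assuming the accessed row stays open until a different row is needed."""
    hits = misses = 0
    open_row = None
    for addr in word_addrs:
        row = addr // columns
        if row == open_row:
            hits += 1
        else:
            misses += 1
            open_row = row
    return hits, misses

# Synthetic trace: word 0 and word 1024 map to blocks 0 and 256, which
# collide in a direct-mapped 256-block cache.
trace = [0, 1024] * 4
results: Dict[int, Tuple[int, int]] = {}
for ways in (1, 2, 4, 8):
    cache = WriteThroughCache(num_blocks=256, words_per_block=4, ways=ways)
    for addr in trace:
        cache.access(addr)
    results[ways] = (cache.hits, cache.misses)
print(results)  # {1: (0, 8), 2: (6, 2), 4: (6, 2), 8: (6, 2)}

print(sdram_row_hits(range(64), columns=8))     # (56, 8)
print(sdram_row_hits(range(64), columns=1024))  # (63, 1)
```

For a sequential stream, wider rows keep the same row open longer. This is one plausible reason row hits rise with the number of columns, although the real trend also depends on the application's access pattern.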